Current areas of research

AI Avatars

Recent advances in generative AI have made it possible to create realistic avatars for a variety of applications, from gaming to virtual reality. These avatars can be customized to look and behave like real people, enabling immersive experiences that are increasingly difficult to distinguish from reality.

Knowledge Graphs

It is well known that a neural network stores its knowledge in the weights connecting its neurons, and that it represents each input as a point in a latent space. Can we use the same techniques to capture and store the latent representations of all inputs to a model?
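One way to make the question concrete is to treat every input the model sees as a node, store its latent vector, and link inputs whose vectors are close, which yields a similarity graph over inputs. The sketch below is a hypothetical illustration, not an established method: a real system would read hidden-state activations from the model, whereas here a bag-of-words count vector (`encode`) stands in so the example runs without one. `LatentGraph`, `threshold`, and `nearest` are illustrative names.

```python
import math
from collections import Counter


def encode(text: str) -> Counter:
    """Stand-in for a model's latent vector: a sparse bag-of-words count vector."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse vectors (0.0 if either is empty)."""
    dot = sum(count * b[token] for token, count in a.items())
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class LatentGraph:
    """Stores every input's vector as a node and links nodes whose vectors are similar."""

    def __init__(self, threshold: float = 0.5) -> None:
        self.threshold = threshold
        self.nodes: list[tuple[str, Counter]] = []
        self.edges: set[tuple[int, int]] = set()

    def add(self, text: str) -> int:
        vec = encode(text)
        new_id = len(self.nodes)
        # Connect the new node to every stored node above the similarity threshold.
        for i, (_, other) in enumerate(self.nodes):
            if cosine(vec, other) >= self.threshold:
                self.edges.add((i, new_id))
        self.nodes.append((text, vec))
        return new_id

    def nearest(self, query: str) -> str:
        """Return the stored input whose vector is most similar to the query's."""
        q = encode(query)
        return max(self.nodes, key=lambda node: cosine(q, node[1]))[0]
```

For example, after adding "the cat sat on the mat", "a cat sat on the mat", and "stock prices fell sharply", only the first two inputs are linked, and `nearest("cat on a mat")` returns "a cat sat on the mat".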

Low-bit Models

Microsoft's BitNet b1.58 showed that it is possible to train a non-trivial ternary language model, with weights restricted to {-1, 0, +1}. With recent advances in quantization-aware training (QAT), how far can we push low-bit models?

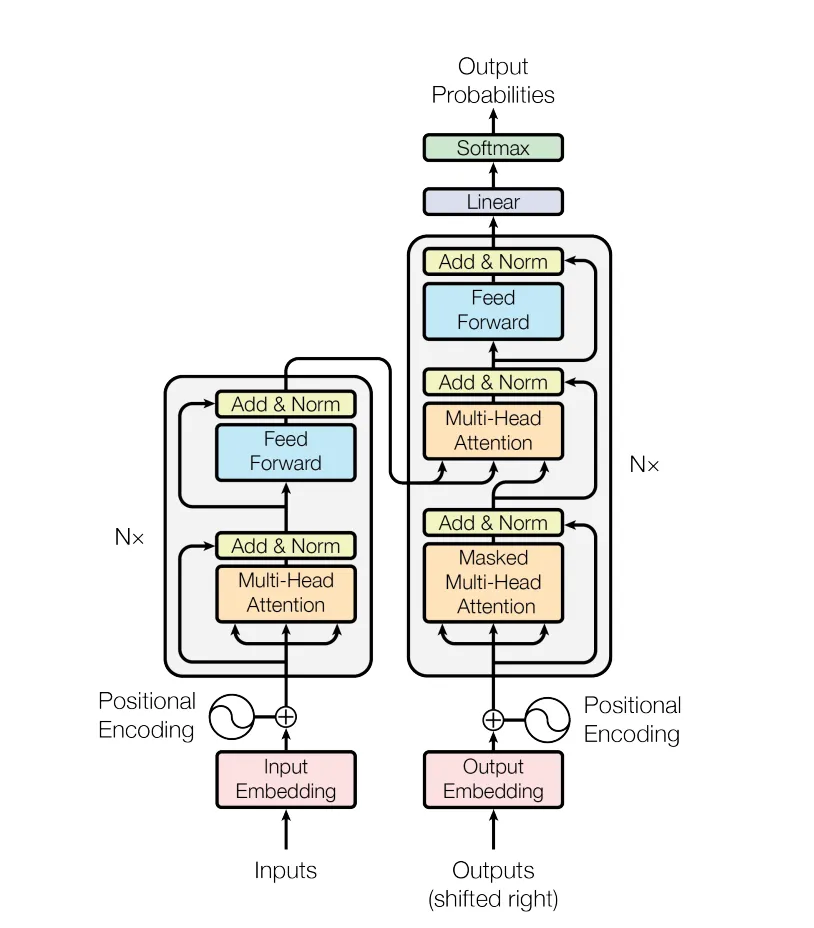
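For reference, BitNet b1.58 quantizes weights with an "absmean" scheme: divide by the mean absolute weight, then round and clip to {-1, 0, +1}. QAT commonly pairs such a quantizer with a straight-through estimator (STE), which keeps full-precision latent weights and treats the rounding as the identity during backpropagation. The following is a minimal pure-Python sketch of both ideas on a single linear neuron; `ternarize`, `forward`, `qat_step`, the squared-error loss, and the learning rate are assumptions for illustration, not BitNet's training code.

```python
def ternarize(weights: list[float], eps: float = 1e-8) -> tuple[list[int], float]:
    """Absmean quantization: scale by the mean |w|, then round and clip to {-1, 0, +1}."""
    gamma = sum(abs(w) for w in weights) / len(weights)
    q = [max(-1, min(1, round(w / (gamma + eps)))) for w in weights]
    return q, gamma


def forward(latent: list[float], x: list[float]) -> float:
    """Single linear neuron evaluated with ternary weights rescaled by gamma."""
    q, gamma = ternarize(latent)
    return gamma * sum(qi * xi for qi, xi in zip(q, x))


def qat_step(latent: list[float], x: list[float], target: float, lr: float = 0.1) -> list[float]:
    """One QAT step on the loss 0.5 * (y - target)**2.

    The straight-through estimator treats ternarize() as the identity when
    backpropagating (and ignores the gradient through gamma), so dL/dw_i is
    approximated by (y - target) * x_i and applied to the latent weights."""
    err = forward(latent, x) - target
    return [w - lr * err * xi for w, xi in zip(latent, x)]
```

For example, `ternarize([0.9, -0.05, -1.2, 0.3])` yields the ternary weights `[1, 0, -1, 0]` with scale γ ≈ 0.6125. Python's `round()` rounds halves to even, and BitNet b1.58 also quantizes activations, which this sketch omits.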

Transformers as components

Transformers have been shown to be universal approximators of continuous sequence-to-sequence functions. Large language models map text into latent-space embeddings. What else can we map into a latent space, and can we network these encoders and decoders together?

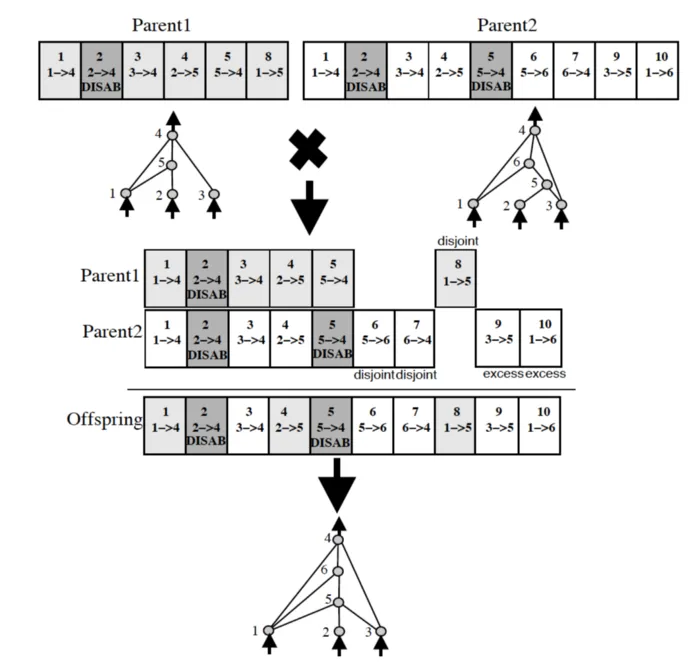
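If each component exposes a fixed-size latent vector, networking them reduces to composing functions whose dimensions agree, with small adapters bridging latent spaces that differ. The sketch below illustrates that wiring under loud assumptions: `Module`, `linear`, and `chain` are hypothetical names, and the hand-written linear maps stand in for trained transformer encoders, decoders, and adapters.

```python
from dataclasses import dataclass
from typing import Callable, Sequence

Vector = list[float]


@dataclass
class Module:
    """A pluggable component mapping one representation to another (hypothetical interface)."""
    name: str
    in_dim: int
    out_dim: int
    fn: Callable[[Vector], Vector]

    def __call__(self, v: Vector) -> Vector:
        if len(v) != self.in_dim:
            raise ValueError(f"{self.name}: expected input dim {self.in_dim}, got {len(v)}")
        return self.fn(v)


def linear(weights: list[list[float]]) -> Callable[[Vector], Vector]:
    """Matrix-vector product; a stand-in for a trained encoder, decoder, or adapter."""
    return lambda v: [sum(w * x for w, x in zip(row, v)) for row in weights]


def chain(modules: Sequence[Module]) -> Callable[[Vector], Vector]:
    """Connect modules end to end, rejecting any pair whose latent dimensions disagree."""
    for a, b in zip(modules, modules[1:]):
        if a.out_dim != b.in_dim:
            raise ValueError(f"cannot connect {a.name} ({a.out_dim}) to {b.name} ({b.in_dim})")

    def run(v: Vector) -> Vector:
        for m in modules:
            v = m(v)
        return v

    return run


# Toy pipeline: 3-d "image" input -> 2-d latent -> adapted latent -> 1-d "text" output.
encoder = Module("image_encoder", 3, 2, linear([[1, 0, 0], [0, 1, 1]]))
adapter = Module("adapter", 2, 2, linear([[0, 1], [1, 0]]))  # swaps the latent axes
decoder = Module("text_decoder", 2, 1, linear([[1, 2]]))
pipeline = chain([encoder, adapter, decoder])
```

Here `pipeline([1.0, 2.0, 3.0])` returns `[7.0]`, while wiring the decoder's 1-dimensional output into the 2-dimensional adapter raises a `ValueError` when the chain is built, rather than failing midway through a forward pass.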

NEAT language models

Can we use Neuroevolution of Augmenting Topologies (NEAT) to evolve useful language models? Is NEAT a potential technique for escaping dependence on GPU compute?
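NEAT grows networks from minimal topologies through structural mutations, tagging each new connection gene with a historical "innovation number" so that genomes can later be aligned for crossover. The sketch below shows the add-node mutation: an enabled connection A->B is disabled and replaced by A->N (weight 1.0) and N->B (the old weight). These are discrete edits on a small graph and need no GPU, although evaluating the fitness of a language model would likely still be costly. `Genome`, `InnovationTracker`, and the simplification of keying innovation numbers by `(in_node, out_node)` for the whole run are assumptions for illustration.

```python
import random
from dataclasses import dataclass, field


@dataclass
class ConnectionGene:
    in_node: int
    out_node: int
    weight: float
    enabled: bool
    innovation: int


class InnovationTracker:
    """Gives the same structural change the same innovation number.
    Simplification: keyed by (in_node, out_node) for the whole run."""

    def __init__(self) -> None:
        self._ids: dict[tuple[int, int], int] = {}

    def get(self, in_node: int, out_node: int) -> int:
        key = (in_node, out_node)
        if key not in self._ids:
            self._ids[key] = len(self._ids)
        return self._ids[key]


@dataclass
class Genome:
    num_nodes: int
    connections: list[ConnectionGene] = field(default_factory=list)

    def add_connection(self, in_node: int, out_node: int, weight: float,
                       tracker: InnovationTracker) -> None:
        innovation = tracker.get(in_node, out_node)
        self.connections.append(ConnectionGene(in_node, out_node, weight, True, innovation))

    def mutate_add_node(self, tracker: InnovationTracker, rng: random.Random) -> None:
        """Split a random enabled connection A->B into A->N->B."""
        enabled = [c for c in self.connections if c.enabled]
        if not enabled:
            return
        old = rng.choice(enabled)
        old.enabled = False  # the old gene is kept in the genome but disabled
        new_node = self.num_nodes
        self.num_nodes += 1
        # Incoming weight 1.0 and outgoing weight equal to the old weight keep
        # the mutation's initial effect on the network small.
        self.add_connection(old.in_node, new_node, 1.0, tracker)
        self.add_connection(new_node, old.out_node, old.weight, tracker)


# Minimal starting topology: inputs 0 and 1 wired directly to output 2.
tracker = InnovationTracker()
genome = Genome(num_nodes=3)
genome.add_connection(0, 2, 0.5, tracker)
genome.add_connection(1, 2, -0.8, tracker)
genome.mutate_add_node(tracker, random.Random(0))
```

After the mutation the genome has four nodes and four connection genes, one of them disabled, with innovation numbers 0 through 3.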